Research Projects

National Science Foundation (NSF) CAREER – Award #2046118

Enabling Trustworthy Speech Technologies for Mental Healthcare

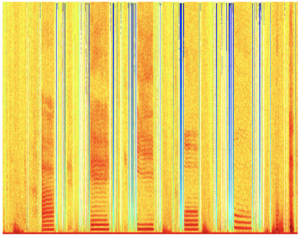

This research aims to design reliable machine learning, notably for speech-based diagnosis and monitoring of mental health, that addresses three pillars of trustworthiness: explainability, privacy preservation, and fair decision making. Trustworthiness is critical for both patients and clinicians: patients must be treated fairly and without the risk of reidentification, while clinical decision-making needs to rely on explainable and unbiased machine learning. Thus, this work (1) designs novel speaker anonymization algorithms that retain mental health information and suppress information related to the identity of the speaker; (2) improves explainability of speech-based models for tracking mental health through novel convolutional architectures that learn explainable spectrotemporal transformations relevant to speech production fundamentals; and (3) examines how bias in data and model design may perpetuate social disparities in mental health. Through a series of experiments, this work further contributes to understanding how human-machine partnerships are formed in mental healthcare settings along the dimensions of trust formation, maintenance, and repair. You can find more information here.

Student researchers: Kexin Feng, Abdullah Aman Tutul, Ehsanul Haque Nirjhar, Vinesh Ravuri, Michael Yang

Related Publications:

Air Force Office of Scientific Research (AFOSR) – FA9550-22-1-0010

Investigating Human Trust in AI: A Case Study of Human-AI Collaboration on a Speech-Based Data Analytics Task

This research investigates trust in artificial intelligence (AI) during a human-in-the-loop collaborative speech-based data analytics task (DAT). Our work examines dimensions of trust in a human-AI collaboration paradigm in which human users are asked to collaborate with an AI system in detecting deceptive or truthful speech, a challenging DAT of high relevance to the Air Force Research Laboratory (AFRL) and the military. The objectives of this work are to: (1) investigate dimensions of trust in human-AI collaborative DAT; (2) identify human and system-related factors of trust in AI; and (3) build an evidence-based model of human trust in AI and its effect on human-AI teaming outcomes.

Student researchers: Abdullah Aman Tutul

Related Publications:

National Aeronautics and Space Administration (NASA) – #80NSSC22K0775

Artificial intelligence for tracking micro-behaviors in longitudinal data and predicting their effect on well-being and team performance

Future long-distance space exploration will pose a number of challenges that increase the risk of inadequate cooperation, coordination, and psychosocial adaptation, and can lead to behavioral health and performance decrements. Micro-behaviors detected by artificial intelligence (AI) have the potential to provide unique insights into emotional reactivity and operationally relevant team performance, beyond the self-report team functioning measures commonly used in NASA-funded research. Our research leverages advanced multimodal data analytics to detect micro-behaviors, including micro-aggressions, micro-conflicts, and micro-affirmations. It further identifies emotional reactivity to micro-behaviors and examines the effect of micro-behaviors on operationally relevant team functioning.

Student researchers: Projna Paromita

Related Publications:

National Science Foundation (NSF) S&CC – Award #2126045

Digital Twin City for Age-friendly Communities – Crowd-biosensing of Environmental Distress for Older Adults

Neighborhood environments in most communities can be the source of significant physical and emotional distress to older adults, thereby inhibiting their mobility and outdoor physical activity. This project thus aims to (1) create a digital twin city (DTC) model that reveals older adults’ collective distress and associated environmental conditions, and (2) leverage the DTC model to develop and implement technological and environmental interventions that alleviate such distress and promote older adults’ independent mobility and physical activity. The DTC model is constructed by matching or twinning crowdsourced biosignals with street-level visual data from participatory sensing and Google Street View, enabling the establishment of a city’s affective map. The project leverages the DTC model to design, implement, and/or evaluate stress-responsive interventions, in collaboration with local stakeholders and older adults in an underserved neighborhood in Houston, TX.

Postdoctoral researchers: Jinwoo Kim

Student researchers: Raquel Yupanqui, Ehsanul Haque Nirjhar

Related Publications:

National Science Foundation (NSF) CHS – Award #1956021

Bio-Behavioral Data Analytics to Enable Personalized Training of Veterans for the Future Workforce

This project promotes fair and ethical treatment of veterans in the future job landscape by providing the empirical knowledge needed to remove implicit bias and misconceptions against veterans and to prepare veterans for obtaining and maintaining competitive positions in the future workforce. We are gathering empirical evidence to understand veterans’ common feelings, thoughts, and potential weaknesses in social effectiveness skills during civilian job interviews. We are further designing a preliminary assistive technology enabled by artificial intelligence for promoting veterans’ interview skills in a tailored and inclusive manner, ultimately preparing them for the future workforce and broadening their participation in fields where they are traditionally underrepresented, such as computing. Please follow this link for more information.

Student researchers: Ellen Hagen, Ehsanul Haque Nirjhar, Md Nazmus Sakib

Related Publications:

National Institutes of Health (NIH) – 1R42MH123368-01

The Development and Systematic Evaluation of an AI-Assisted Just-in-Time Adaptive Intervention for Improving Child Mental Health

Early intervention in maladaptive family relationships is crucial for preventing or offsetting negative developmental trajectories in at-risk children. Just-in-time adaptive interventions (JITAIs) use smartphones, wearables, and artificial intelligence (AI) to identify and respond to psychological processes and contextual events as they unfold in everyday life. In collaboration with the Technological Interventions for Ecological Systems (TIES) Lab at UT Austin and the Signal Analysis and Interpretation Laboratory at USC, this research project builds and tests a JITAI that provides opportune support to families, responding dynamically to contextual events and shifting psychological states in order to amplify attachment, regulate emotion, and intervene in maladaptive parent-child interactional patterns. More information can be found here.

Student researchers: Abdullah Aman Tutul |

Engineering Information Foundation (EiF) – Grant 18.02

In-the-Moment Interventions for Public Speaking Anxiety

Public speaking skills are essential to help people effectively exchange ideas, persuade, and make tangible impact, and they are a major factor in academic and professional success. A major cause of anxiety during public speaking is the novelty and uncertainty of the task, which can be alleviated through exposure to public speaking experiences and a gradual change in the negative perception of this situation. The goal of this research is to design in-the-moment virtual-reality interventions for public speaking that can predict momentary anxiety from bio-behavioral signals and automatically provide personalized feedback. You can see this video for more information.

Student researchers: Megha Yadav, Ehsanul Haque Nirjhar, Kexin Feng, Jason Raether

Related Publications:

Texas A&M PESCA Research Seed Grant Program

Privacy-Preserving Emotion Recognition

Voice-enabled communication is a major part of today’s cyberspace and relies on the transmission and sharing of speech signals. Monitoring speech patterns can significantly benefit people’s lives, since it can help track, predict, and potentially intervene on individuals’ physical and mental health. Yet the sensitivity and privacy of the information contained in speech make it a tempting target for commercial, political, and cultural exploitation, often with malicious intent. This work develops computational models of speech capable of preserving facets of information related to the human state (e.g., affect, pathology, emotion), while eliminating speech-dependent information related to the identity of the speaker.

Student researchers: Vansh Narula

Related Publications:

Texas A&M Innovation[X] Program

Adaptive Responsive Environments

Light, colors, smells, and noises are among the environmental factors that consciously or unconsciously affect our mood, cognition, performance, and even physical and emotional health. For individuals with neurological abnormalities, such as children with autism spectrum disorders (ASD), environmental discomfort stemming from noises, scents, light, and heat can feel like a continuous bombardment. Environmental sensation differs for each person, and no single condition fits all individuals, which underlines the need for personalized solutions. In collaboration with Mechanical Engineering, Construction Science, and Psychological & Brain Sciences, we aim to design an intelligent and adaptive indoor living space that can continuously and unobtrusively “sense” each individual’s neuro-physiology, and then seamlessly and intuitively adjust the local environment (e.g., temperature, light) in a unique and personalized way to mitigate negative outcomes (e.g., increased stress). You can find more information on the project website and this video.

Student researchers: Shravani Sridhar

Texas A&M X-Grant / Texas A&M Triads for Transformation (T3) Program

Crowd-Biosensing of Physical and Emotional Distress for Walkable Built Environment

In collaboration with the Smart and Sustainable Construction (SSC) Research Group directed by Dr. Changbum R. Ahn, the goal of this project is to detect locations of emotional and physical distress in the built environment. Our team develops signal processing and machine learning algorithms in order to quantify pedestrians’ collective distress from physiological signals collected from wearable devices.

Student researchers: Prakhar Mohan, Jinwoo Kim, Ehsanul Haque Nirjhar

Related Publications:

Intelligence Advanced Research Projects Activity (IARPA) – #2017-17042800005

TILES – Tracking Individual Performance with Sensors

In collaboration with the Signal Analysis and Interpretation Laboratory directed by Dr. Shri Narayanan, the purpose of this study is to understand how individual differences, mental states, and well-being affect job performance, by collecting physical information through the use of wearable sensors, environmental information through the use of environmental sensors, and behavioral information through the use of surveys. More information about this project can be found here.

Student researcher: Projna Paromita (Psyche)

Related Publications:

Biomedical/physiological signal processing for wearable technology

Wearable biometric sensors are increasingly embedded into our everyday life, yielding large amounts of biomedical/physiological data for which the presence of human experts is not always guaranteed. This underlines the need for robust physiological models that efficiently analyze and interpret the acquired signals, with applications in daily life, well-being, healthcare, security, and human-computer interaction. The goal of this research is the development of robust algorithms for reliable representation and interpretation of biomedical/physiological signals and their co-evolution with other signal modalities and behavioral indices, centered around three main axes.

Student researcher: Projna Paromita (Psyche)

Related Publications:

Acoustic analysis of emotion and behavior

Acoustic aspects of speech, such as intonation and prosody, are linked to emotion, affect, and several psychopathological factors. We have analyzed non-verbal vocalizations (e.g., laughter) in relation to children’s engagement patterns. We have further explored the use of transfer learning techniques for leveraging the abundance of publicly available data. Finally, the co-regulation of acoustic patterns between children has been studied in relation to their engagement levels during speech-controlled interactive robot-companion games.

Student researcher: Kexin Feng

Related Publications: