We are releasing an updated version of the VerBIO dataset. This version includes moment-to-moment (i.e., time-continuous) ratings of stress from four annotators, along with their aggregated ratings, which can be used for continuous stress detection. For the updated version of the dataset, please visit https://sites.google.com/colorado.edu/verbio-dataset.

What’s new in the updated version?

- Time-continuous ratings of stress have been added to the Annotation folder and can be used for continuous stress detection. Train-test split information for this use case is also provided.

- All features and signals are in .csv format instead of .xlsx format.

- Physiological signals for the relaxation and preparation periods are provided for TEST sessions.

- Actiwave features in the Features folders are updated.
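
As a rough illustration of working with the time-continuous ratings in .csv form, the sketch below reads a small table of per-annotator ratings and averages them at each time step. The column names (`time`, `annotator1` to `annotator4`), the sample values, and the choice of a plain mean are all assumptions made for this example. The actual file layout in the Annotation folder and the method behind the released aggregated ratings are not described here and may differ.

```python
import csv
import io
from statistics import mean

# Hypothetical layout: one row per time step, one column per annotator.
# The real VerBIO annotation files may use different names and units.
SAMPLE_CSV = """time,annotator1,annotator2,annotator3,annotator4
0.0,1.0,2.0,1.0,2.0
0.5,2.0,2.0,3.0,1.0
1.0,3.0,4.0,3.0,2.0
"""


def load_ratings(handle):
    """Return a list of (time, [ratings]) tuples read from a CSV file handle."""
    reader = csv.DictReader(handle)
    # Every column except "time" is treated as one annotator's rating.
    rating_cols = [c for c in reader.fieldnames if c != "time"]
    return [
        (float(row["time"]), [float(row[c]) for c in rating_cols])
        for row in reader
    ]


def mean_rating(rows):
    """Average the annotators' ratings at each time step (assumed aggregation)."""
    return [(t, mean(ratings)) for t, ratings in rows]


ratings = load_ratings(io.StringIO(SAMPLE_CSV))
aggregated = mean_rating(ratings)
for t, value in aggregated:
    print(f"{t:.1f}s -> {value:.2f}")
```

To read a real file instead of the inline sample, pass an open file handle, for example `open(path, newline="")`, to `load_ratings`.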

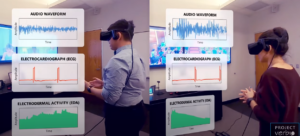

The VerBIO dataset is a multimodal bio-behavioral dataset of individuals’ affective responses while performing public speaking tasks in real-life and virtual settings. The data were collected as part of a research study (EiF grant 18.02) conducted jointly by the HUBBS Lab and the CIBER Lab at Texas A&M University. The aim of this study is to understand the relationship between bio-behavioral indices and public speaking anxiety in both real-world and virtual learning environments. Currently, this dataset contains audio recordings, physiological signals, and self-reported measures from 344 public speaking sessions. During these sessions, 55 participants delivered short speeches on a given topic from newspaper articles in front of a real or virtual audience. You can find more details on the dataset in the following paper:

M. Yadav, M. N. Sakib, E. H. Nirjhar, K. Feng, A. Behzadan and T. Chaspari, “Exploring individual differences of public speaking anxiety in real-life and virtual presentations,” in IEEE Transactions on Affective Computing, doi: 10.1109/TAFFC.2020.3048299.

We are releasing the first version of the VerBIO dataset. This version includes the audio recordings, physiological signal time series from wearable sensors (i.e., from Empatica E4 and Actiwave), and self-reported measures (e.g., trait anxiety, state anxiety, demographics). Future versions of the data will contain the transcripts and third-party annotations of the participants’ moment-by-moment anxiety while delivering the speech.

Related Publications:

- Yadav et al., “Exploring individual differences of public speaking anxiety in real-life and virtual presentations,” IEEE TAFFC 2021

- von Ebers et al., “Predicting the Effectiveness of Systematic Desensitization Through Virtual Reality for Mitigating Public Speaking Anxiety,” ACM ICMI 2020

- Nirjhar et al., “Exploring Bio-Behavioral Signal Trajectories of State Anxiety During Public Speaking,” IEEE ICASSP 2020

- Yadav et al., “Virtual reality interfaces and population-specific models to mitigate public speaking anxiety,” IEEE ACII 2020 (nominated for best paper award)

- Yadav et al., “Speak Up! Studying the interplay of individual and contextual factors to physiological-based models of public speaking anxiety,” TransAI 2019 (invited paper)

Terms and Conditions:

This dataset will be provided to the requestor for academic research purposes only, after their submitted form has been verified. The dataset cannot be used for commercial purposes because of privacy concerns for the participants of the research study. After receiving the data, the requestor cannot redistribute or share the data with a third party, or put the data on a public website. If the requestor publishes research that uses this dataset, they should cite the following paper:

M. Yadav, M. N. Sakib, E. H. Nirjhar, K. Feng, A. Behzadan and T. Chaspari, “Exploring individual differences of public speaking anxiety in real-life and virtual presentations,” in IEEE Transactions on Affective Computing, doi: 10.1109/TAFFC.2020.3048299.